The Crosby Street Hotel and the Chelsea Pines Inn, New York City

In 1999, at the peak of the dot-com bubble, Epinions.com came online. The site allowed regular people to post reviews of consumer products like cameras, books, and toys.

It is difficult to imagine now, but at the time this was a novel idea, and it attracted a lot of interest. The New York Times described the site as a “marketplace for ideas”, and said that the company had become a “lightning rod for talent” in Silicon Valley.

“Epinions” are now pervasive on the web and have become a major factor in consumer decision-making. We rely on non-professional reviews to decide where to dine (Yelp), what household goods to buy (Amazon), what to read (Goodreads), what hotels to stay at (TripAdvisor), and even what doctors to choose (Healthgrades). User reviews have contributed to people making better informed decisions about how to spend their time and money. They have also increased incentives for everyone from restaurant owners to TV makers to improve the quality of their products and services.

But aggregating user reviews does not always produce accurate results. TripAdvisor and Yelp provide us with deliciously definite claims about how places compare to each other, like “#1 in New York City” (a designation that TripAdvisor gives to the hotel pictured at right; more on that later). This post will show that these claims are frequently wrong, especially for hotels and restaurants.

#1 in NYC?

There are three reasons why user reviews fail to produce accurate results and favor some kinds of hotels over others.

Incentives

Who writes reviews — and why? These are the key questions in assessing the reliability of sites like Yelp and TripAdvisor. If certain groups of people are more likely to write reviews, or certain types of experiences are more likely to prompt someone to write a review, the results may be skewed in favor of certain types of places.

We know that reviews tend to be written by people who have had either an exceptionally good or bad experience. This results in a lot of 1- and 5-star reviews. The median customer — someone who had an “OK” experience and who has nothing particularly positive or negative to say — tends to be underrepresented; some fire in the belly is an effective inducement for someone to spend time posting a review. This is not necessarily a problem, however. It does mean that reviews are often full of vitriol and effusive praise. But as long as places attract 1- and 5-star reviews in proportion to how much they deserve them, their overall ratings should accurately reflect customers’ experiences.

The problem is that users frequently write these 1- and 5-star reviews for reasons that are entirely irrelevant to future customers’ potential experiences. Negative reviews, in particular, penalize some kinds of establishments disproportionately to others.
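To see how much a handful of irrelevant reviews can matter, here is a minimal arithmetic sketch. The review counts are invented purely for illustration (they are not TripAdvisor data), and `average_rating` is a hypothetical helper that computes a plain mean, which is not necessarily how any review site calculates its scores.

```python
def average_rating(star_counts):
    """Mean star rating from a {stars: number_of_reviews} histogram."""
    total = sum(star_counts.values())
    return sum(stars * n for stars, n in star_counts.items()) / total

# Hypothetical hotel: 200 reviews, all written by people who stayed there.
guest_reviews = {1: 10, 2: 10, 3: 20, 4: 60, 5: 100}

# The same hotel after 30 extra 1-star reviews from people who never
# stayed there (made-up number, for illustration only).
with_irrelevant = dict(guest_reviews)
with_irrelevant[1] += 30

print(round(average_rating(guest_reviews), 2))    # 4.15
print(round(average_rating(with_irrelevant), 2))  # 3.74
```

In this made-up case, reviews that say nothing about the guest experience pull the average down by roughly 0.4 stars.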

The nightclub penalty

A good example is the “nightclub penalty” on TripAdvisor. This affects hotels that are attached to popular clubs or bars, especially those with a “selective door” (a euphemistic expression used, especially in New York, to refer to bouncers turning away people who are ugly, poorly dressed, or who arrive in a group that has too many men and not enough women).

People often write damning reviews of a hotel they haven’t stayed at because they had a bad experience at its nightclub.

Such hotels are more likely to receive a high number of 1-star reviews than their peers. This is not because they are bad hotels, or because their nightclubs are noisy and cause discomfort to guests. Instead, the reviews are posted by disgruntled revelers who get turned away at the door. Many have not even stayed overnight at the hotel. A classic example is someone who posted a 1-star review of the Ritz in London because she was wearing jeans and wasn’t allowed into the hotel bar; she thought an exception ought to have been made because hers were by Armani.

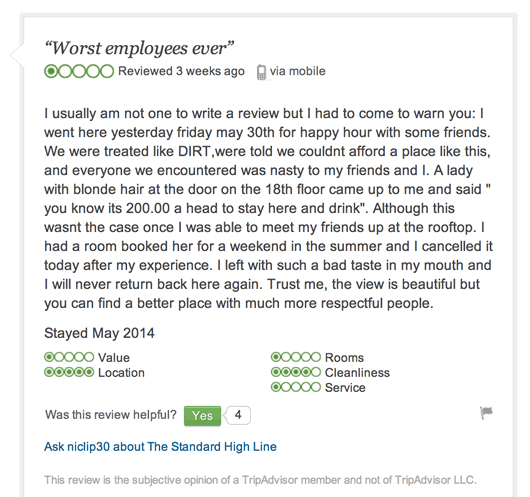

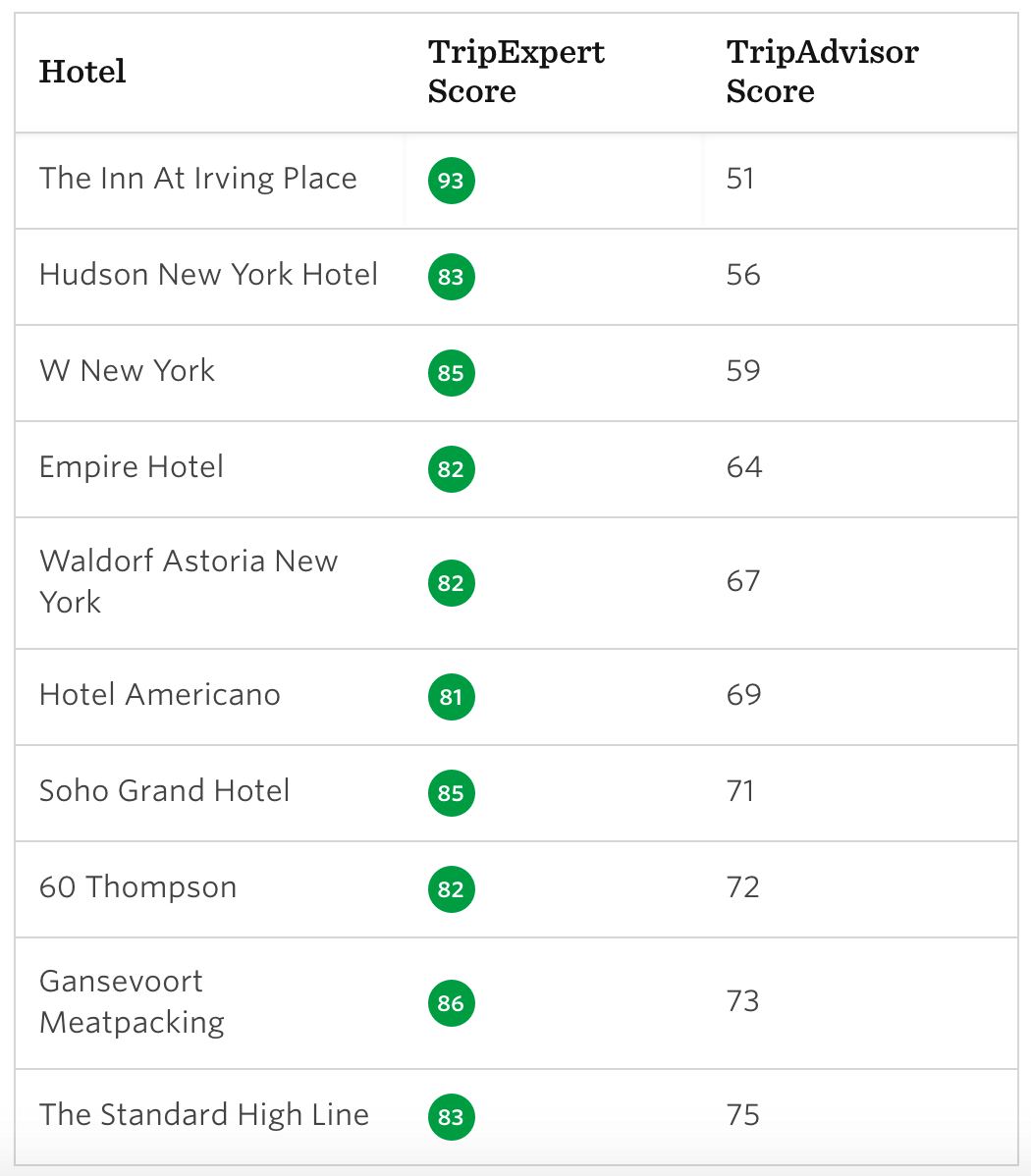

For a more in-depth example, consider The Standard High Line in New York. It has a high TripExpert Score; Fodor’s calls it “one of New York’s hottest hotels” and, as Travel + Leisure notes, virtually every room has stunning views. It’s not perfect; we rank it #51 in New York, and many of the negative reviews on TripAdvisor make valid points about its shortcomings. But its TripAdvisor score, 75, is strangely low, putting it in the bottom half of TripAdvisor’s ranking of New York’s approximately 440 hotels. It’s apparently worse than a Comfort Inn on the Lower East Side (#172) and an Econo Lodge in Times Square (#218). Why? At least part of the reason is that people go on TripAdvisor to complain about how they have been treated by the bouncers or the wait staff at Le Bain, one of the city’s hottest nightlife destinations. The following review is representative:

Just in case you (understandably) didn’t read the whole extract: the reviewer admits to never having stayed at the hotel.

If all hotels had popular nightlife venues, this would not present a problem for a review aggregator. But obviously they do not. Using TripExpert data to provide a baseline for comparison, we can explore the effects of the nightclub penalty. The following table shows all hotels in New York that score very well on TripExpert (> 80) but poorly on TripAdvisor (< 75). 6 out of 10 of the hotels (the shaded rows) have nightlife venues with “selective doors”. All 6 have reviews on TripAdvisor that consist of complaints about treatment by bouncers or maitre d’s. In other words, they have all taken a nightclub penalty.
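The comparison described above boils down to a simple filter. The sketch below assumes each hotel record carries both scores on a 0-100 scale plus a flag for a "selective door"; the `Hotel` class, the field names, and the four sample records are hypothetical placeholders, not our actual data.

```python
from dataclasses import dataclass

@dataclass
class Hotel:
    name: str
    tripexpert: int       # expert-based score, 0-100
    tripadvisor: int      # user-based score, 0-100
    selective_door: bool  # has a nightlife venue with a door policy

def divergent(hotels, expert_min=80, user_max=75):
    """Hotels that experts rate highly but users rate poorly."""
    return [h for h in hotels
            if h.tripexpert > expert_min and h.tripadvisor < user_max]

# Placeholder records, invented for illustration.
hotels = [
    Hotel("Hotel A", 88, 70, True),
    Hotel("Hotel B", 85, 90, False),
    Hotel("Hotel C", 82, 74, False),
    Hotel("Hotel D", 60, 65, True),
]

flagged = divergent(hotels)
share = sum(h.selective_door for h in flagged) / len(flagged)
print([h.name for h in flagged], share)
```

With real data, `share` is the figure of interest: the fraction of divergent hotels that have a selective door.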

The nightclub penalty is broader than its name suggests. It can apply to any establishment that has ancillary facilities frequented by non-customers, like golf courses, beaches, casinos (“rip off casino”), and so on. Some reviewers even penalize hotels on the basis of their experiences at nearby population centers (“the nearby town is terrifying”).

The service penalty

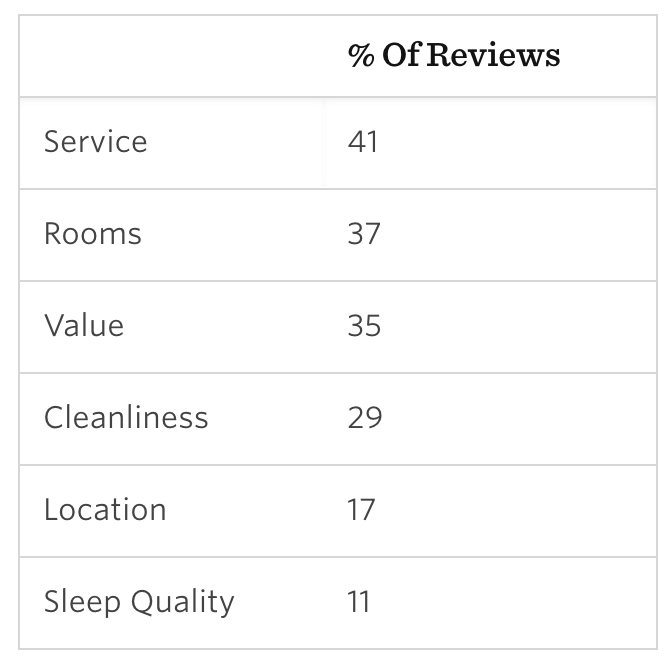

Rejection by a bouncer at a nightclub is just one example of an event that is likely to prompt someone to take the time to log on to TripAdvisor and write a scathing review of a hotel. To investigate what else triggers negative review writing, we read 1,000 one-star reviews. (We selected 100 hotels at random from TripAdvisor’s 447 listings in New York and for each of them read the 10 most recently posted 1-star reviews.) We used TripAdvisor’s own rating categories to classify the reasons that the user awarded the hotel such a low score. These are the results:

Claims of bad service contribute to 1-star reviews more than any other factor. Over 40% of the 1-star reviews that we read included a complaint about the service. Luxury hotels are especially prone to such complaints; for 5-star hotels, the figure increases from 41% to 46%, which means that almost half of 1-star reviews of luxury hotels complain about service. Frequently, they say the service is rude or arrogant. This review of the St. Regis, one of New York’s great hotels, is like a lot of others we encountered:

Of course, hotels should be penalized for stuffy, unpleasant, and brusque service. But by how much? Is service really more important than the comfort of the rooms or the convenience of the location? A survey of travelers conducted by TripAdvisor suggests that service is actually the least important major factor:

When asked what makes a hotel great, 30 percent of respondents said location is the most important factor, while 29 percent cited comfortable beds, and 24 percent said hotel staff/great service.

This means that there is a mismatch between what is important to travelers and what reviewers write about on TripAdvisor. Bad service (or the perception of bad service) has an effect on a hotel’s rating that is out of proportion with how much people value good service.

This has two consequences. Most obviously, hotels with bad service get penalized more than they deserve. But the overrepresentation of negative reviews about service probably also works to the detriment of certain kinds of hotels. The average guest of a no-frills motel has less contact with hotel staff than the average guest of a luxury resort. The motel has fewer staff overall and fewer services that require interaction with staff (no 24-hour concierge, no towel boy at the pool, no spa attendant, etc.). The motel guest is therefore less likely to be rubbed the wrong way and end up posting a review complaining about the snootiness or brusqueness of the staff.

Service is important. But it’s only one of many factors that influence how much we enjoy a hotel stay. In negative TripAdvisor reviews, other factors like location and comfort are often not given the attention they deserve. Location, which according to the survey is the most important contributor to a good hotel, was mentioned in only 17% of the reviews in our 1,000-review sample.
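For readers who want to repeat the exercise, the sampling and tallying procedure behind the 1,000-review sample can be sketched roughly as follows. We read and classified the reviews by hand; the data layout here (a dict of hotels mapping to review records with `stars`, `date`, and `categories` fields) and both function names are assumptions made for illustration.

```python
import random
from collections import Counter

def sample_one_star_reviews(reviews_by_hotel, n_hotels=100, per_hotel=10, seed=0):
    """Pick hotels at random, then take each one's most recent 1-star reviews."""
    rng = random.Random(seed)
    names = sorted(reviews_by_hotel)
    chosen = rng.sample(names, min(n_hotels, len(names)))
    sample = []
    for hotel in chosen:
        one_star = [r for r in reviews_by_hotel[hotel] if r["stars"] == 1]
        # ISO-format date strings sort chronologically.
        one_star.sort(key=lambda r: r["date"], reverse=True)
        sample.extend(one_star[:per_hotel])
    return sample

def complaint_shares(sample):
    """Fraction of reviews mentioning each category (a review may mention several)."""
    if not sample:
        return {}
    counts = Counter(cat for r in sample for cat in set(r["categories"]))
    return {cat: n / len(sample) for cat, n in counts.items()}

# Tiny invented dataset, for illustration only.
reviews_by_hotel = {
    "Hotel A": [
        {"stars": 1, "date": "2014-03-01", "categories": ["service", "value"]},
        {"stars": 5, "date": "2014-04-01", "categories": []},
        {"stars": 1, "date": "2014-01-15", "categories": ["service"]},
    ],
    "Hotel B": [
        {"stars": 1, "date": "2014-02-10", "categories": ["location"]},
        {"stars": 1, "date": "2013-12-01", "categories": ["rooms", "service"]},
    ],
}

sample = sample_one_star_reviews(reviews_by_hotel, n_hotels=2, per_hotel=1)
shares = complaint_shares(sample)
print(shares)
```

Because a single review can complain about several things, the shares can add up to more than 100%.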

The high expectations penalty

While analyzing 1-star reviews on TripAdvisor, we encountered a lot of guests who were disappointed because their stay did not live up to expectations. Frequently, reviews such as these are posted by people who resent having paid a lot of money. This is not necessarily bad: value for money is obviously something that is important to most travelers, and it’s only fair that expensive hotels are held to a high standard. But people have different tolerances for price, and user ratings make it impossible to filter out the effect of this high expectations penalty. Say that you’re planning a trip of a lifetime — a honeymoon in Rome — and price is no object. If you go with the TripAdvisor #1, you’ll be staying at the Appia Antica Resort. This is what your room will look like:

I’m sure this is a nice enough hotel, and having read all 91 reviews on TripAdvisor, I have no doubt that the staff are very friendly and helpful. You might even find the stickers on the wall above your bed wishing you good night in six different languages to be a quaint touch.

But you won’t be staying in the best hotel in Rome. Not even close. That would be Hotel de Russie, a “neoclassical landmark” (concierge.com) with “exquisite terraced gardens” (Lonely Planet), positioned between the Spanish Steps and Piazza del Popolo. Hotel de Russie ranks #93 on TripAdvisor, so unless you scroll through four pages of results, you won’t even see it. Why does it perform so badly? If you sort reviews so that those with the lowest ratings appear first, you’ll get the answer. Here are the headlines of the first few:

Hotel de Russie

- “E575 for a double room for double use: waaay over-priced”

- “Too expensive, staff too posh, nice garden.”

- “Simply not worth the money”

- “What a joke and a complete disappointment”

- “Overpriced – Laundry list of complaints”

- “waist of money”

- “De Russie is a Disappointment”

Hotel de Russie has been penalized for being expensive and for disappointing guests who expected a better experience given how much they paid and given the hotel’s reputation. As expensive as it is, however, Hotel de Russie is not the 93rd best hotel in Rome. A far better approach to dealing with hotels in different price categories is to isolate price from the review methodology and rank hotels regardless of how expensive they are — and then let users filter based on their budget. We can make our own decisions about how much we are prepared to pay for a particular occasion; those decisions should not be made for us by other users’ notions of what is good value for money.
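The "rank first, filter by budget afterwards" approach can be sketched in a few lines. The hotel names, scores, and prices below are invented, and `rank_by_quality` and `within_budget` are hypothetical helpers rather than a description of any site's actual implementation.

```python
def rank_by_quality(hotels):
    """Rank on quality score alone; price plays no part in the ordering."""
    ordered = sorted(hotels, key=lambda h: h["score"], reverse=True)
    return [dict(h, rank=i) for i, h in enumerate(ordered, start=1)]

def within_budget(ranked, max_price):
    """Apply the traveler's own budget after ranking, keeping the original ranks."""
    return [h for h in ranked if h["price"] <= max_price]

# Invented examples: quality score out of 100, nightly price in euros.
hotels = [
    {"name": "Grand Hotel", "score": 95, "price": 600},
    {"name": "Friendly B&B", "score": 80, "price": 120},
    {"name": "Mid-range Hotel", "score": 88, "price": 250},
]

ranked = rank_by_quality(hotels)
for h in within_budget(ranked, max_price=300):
    print(h["rank"], h["name"], h["price"])
```

The honeymooner with no budget sees the Grand Hotel at #1, while a traveler capped at 300 euros sees the same ranking with the unaffordable option filtered out. In neither case does another reviewer's view of value for money change the order.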

Fake reviews

No one knows how many reviews on Yelp and TripAdvisor are fake; some estimates are as high as 40%. We do know that the incentives for restaurant and hotel owners to increase their scores are substantial.

Hotels and restaurants can (and do) game the system by posting fake reviews.

A UC Berkeley study showed that an increase of half a star raised a restaurant’s chance of selling out during prime dining time from 13% to 34%. A Harvard Business School study estimated that a 1-star increase improved revenues by between 5% and 9%.

We also know that it’s easy and cheap to buy fake reviews. Outsourcing websites like Fiverr and cloud workforce platforms like Amazon’s Mechanical Turk have been used by hotels and restaurants to buy fake reviews for as little as 25 cents each.

Can fake reviews be weeded out automatically? TripAdvisor and Yelp talk up their clever algorithms that can supposedly identify suspicious reviews and either delete them or prevent them from having an effect on an establishment’s score. These algorithms will improve over time, especially as the sites apply machine learning techniques to their growing amount of data. But with such strong financial incentives involved, nefarious hotels and restaurants (or their far-removed subcontractors) will correspondingly devote a significant amount of time and money to figuring out how to trick the system. Some of the factors that these algorithms take into account are obvious and their effects can be circumvented. For example, extreme reviews attract suspicion, so a hotel that has a 3-star rating can post a lot of 4-star reviews.

If all hotels posted fake reviews at the same rate, the practice might not have a detrimental effect on overall ratings and rankings. But it is likely that some kinds of properties are more prone to doing so than others. A luxury hotel chain like the Four Seasons would run a considerable risk to its reputation if it were found posting fake reviews. A family-owned boutique, by contrast, would find it comparatively easy to keep the practice under wraps, and even if someone found out, the story would hardly be newsworthy.

Demographics

The people who post reviews on Yelp and TripAdvisor are not demographically representative. Unfortunately, there are no reliable statistics about who contributes to these sites by posting reviews. We do, however, know about their overall user base.

Some groups are overrepresented on review sites, which skews results in ways we don’t yet fully understand.

Do demographic differences such as these make a difference? Although we can’t tell for sure, it’s easy to see how they might. Women, for example, are more health conscious than men (significantly more follow a diet) and are much more likely to be vegetarian or vegan (79 percent of vegans in the US are female). These characteristics probably affect how restaurants that cater to these preferences rank relative to those that do not.

The Cinnamon Snail food truck

Earlier this year, Yelp released a list of the top 100 places to eat in the United States. Coming in at #1 was Da Poke Shack, a casual but undeniably outstanding seafood restaurant in Hawaii. Spend some time with the list, however, and you will notice some curious inclusions. For example, according to Yelp, the best place to eat in New York (and the 4th best in the country) is an organic vegan food truck called “The Cinnamon Snail”. In one of the world’s great culinary hubs, with a total of 86 Michelin stars and a dynamic and inventive food culture, is the best food really being served out of a truck?

Maybe. Or maybe “The Cinnamon Snail” is the beneficiary of a demographic that is overrepresented among Yelp reviewers: cost-conscious 20- and 30-somethings who prefer organic food, who are much more likely than the general public to be vegan, and who like the novelty of discovering a great food truck and sharing the experience with their social network. There may be worse things than a tyranny of vegans. But it is nonetheless a tyranny.

The alternative

Crowdsourced opinions about hotels and restaurants can be useful. If you have the time and patience to read hundreds of reviews posted about a hotel on TripAdvisor, you’ll probably get a good sense of what it is like and whether it is a good match for you. But the point is that most people don’t read every review; instead, they rely on the overall score awarded to the hotel and how it ranks relative to others that they are also considering. It is this approach that is problematic. It is subject to abuse by hotel owners who can post glowing reviews of their own properties and damning reviews of their competitors’. It suffers from a self-selection bias because the people who have the greatest incentives to post reviews are often those who have had some kind of experience that is not relevant or is only marginally relevant to other travelers. It systematically favors certain kinds of establishments over others. And, most importantly, these problems will not be resolved as more and more reviews are posted; they are built into the system.

We launched TripExpert because we think it is important for people to have an alternative to user reviews. Our data comes from people whose job it is to review hotels: writers for Lonely Planet, Frommer’s, Travel + Leisure, and so on. By aggregating reviews from 20 different publications, we produce a TripExpert Score that accurately tracks hotel quality.
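As a generic illustration of what aggregating expert reviews can look like, the sketch below puts ratings from publications with different scales onto a common 0-100 scale and averages them. This is an assumption-laden toy, not the formula behind the TripExpert Score, which this post does not spell out; the function names and the three sample ratings are hypothetical.

```python
def normalize(rating, scale_min, scale_max):
    """Map a rating on an arbitrary scale onto 0-100."""
    return 100 * (rating - scale_min) / (scale_max - scale_min)

def aggregate_score(reviews):
    """Unweighted mean of normalized ratings.

    reviews: list of (rating, scale_min, scale_max) tuples, one per publication.
    Returns None when there are no reviews to aggregate.
    """
    if not reviews:
        return None
    return round(sum(normalize(*r) for r in reviews) / len(reviews))

# Invented ratings from three hypothetical publications:
# 4 on a 1-5 scale, 8.5 on a 0-10 scale, 3 on a 0-3 scale.
reviews = [(4, 1, 5), (8.5, 0, 10), (3, 0, 3)]
print(aggregate_score(reviews))  # 87
```

A real aggregator would also have to decide how to weight publications and how to handle reviews that carry no numeric rating at all; the unweighted mean here is only the simplest possible choice.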

Aggregating expert opinion is not a new idea — Rotten Tomatoes and Metacritic have been doing it for movies and TV shows for years — but it has never before been applied to physical establishments like hotels, restaurants, bars, and tourist attractions. We trust professional movie critics to help us decide what films to watch, which is a much more personal and subjective matter.

We now also have a tool for marshalling the expertise of thousands of professional reviewers in making travel plans.

— @tripexpert